Volume 5 Issue 1 (2016) DOI:10.1349/PS1.1938-6060.A.458

Radio/Television/Sound:

Radio Aesthetics and Perceptual Technics in Early American Television

Luke Stadel

Media theorists and historians have long posited a genealogical connection between radio and television.1 Most commonly, this relationship has been discussed in terms of a history of broadcasting, the dominant framework within which both media were developed in an American context.2 In addition to the large number of historiographic works that stress the institutional continuity between television and radio, theories of television sound have typically centered on the assumption that the emergence of television was simply a function of adding images to the medium's blind ancestor, the inverse of the transformation from silent to sound cinema in the 1920s.3 But as a 1950 television advertisement by Westinghouse Electric Corporation demonstrates, the relationship of radio sound to television sound is more complicated than existing models of television sound have suggested. During the two-minute spot, the company's spokesmodel emphasizes the "eighteenth-century styling" of the unit on display and touts the benefits of the company's proprietary "electronic magnifier" feature as well as the Westinghouse black glass tube, which would deliver "a better, clearer, sharper picture, day or night." Beyond its allegedly superior visual capacities, the unit also offered superior sound via "three other kinds of entertainment right at your finger tips": AM radio, FM radio, and phonograph. In distinguishing the first two formats from one another, the spokesmodel observes, "you get wonderful static-free music when you turn [the dial] to FM; you get your favorite radio programs when you turn it to AM."4

As this example neatly illustrates, radio at the dawn of television was hardly a singular phenomenon. The presence or absence of static marked a major dividing line between different modes of radio transmission, modes that emanated not only from the speakers of freestanding radios but also from television sets. In the case of combination sets, it was entirely possible for television to function as "radio without pictures." Further, the choice of an FM subcarrier as the format for sound transmission during the establishment of technical standards for American television was based on a complex understanding of the perceptual experience of television, in which achieving maximal fidelity, the goal posited by most histories of television's technological development, was not the primary concern. Recent scholarship by Michele Hilmes, Shawn VanCour, and Philip Sewell has expanded on institutional and regulatory perspectives to give a more fully historical understanding of the cultural, technological, and aesthetic connections between radio and television, but the way radio specifically influenced the development of sound norms for television remains largely unclear.5

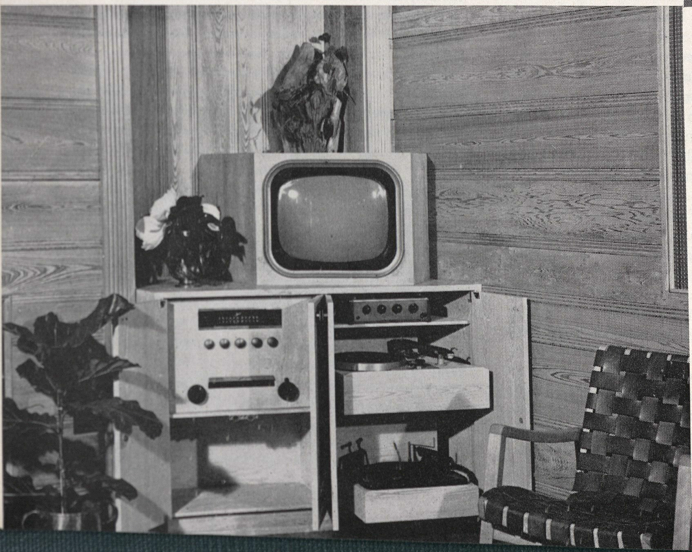

Early television sets were frequently combined with AM/FM radios and phonograph players, as in this model television arrangement submitted by a home audiophile to High Fidelity magazine in 1954

Rather than assuming that television simply illustrated the textual forms of radio, and that the identity of network-era American television can thus be essentially defined through its status as the technological, institutional, and aesthetic inheritor of radio, this article offers a historicized view of the way aesthetic standards for American television developed in relation to existing models of radio aesthetics. Specifically, this article investigates the way "flow," Raymond Williams's influential postulation of the experience of American television, has underpinned models of experience attributed to television sound, a heritage that always explicitly or implicitly relies on seeing a fundamental homology between the acts of television and radio auditorship.6 The idea of a continuously attentive televisual spectator sitting immersed before the screen, the normative viewing model posited by flow, hinges on the ability of television broadcasters, set manufacturers, technicians, and audiences to negotiate a feature central to the medium's technical aesthetics in the broadcast era: noise.

In unpacking the implicit assumptions about the relative fidelity of the television apparatus that underpin flow as an experiential model for television viewing and listening, a model in which textual organization is seen as the most important dimension of the television experience, this article posits noise as the major aesthetic influence of radio on early television. Concern for noise, the most significant aesthetic trait of AM radio (at least from a technical perspective), marked early discussions over the standardization of aesthetic parameters for American television, a fact that can be seen clearly in the proceedings of the National Television System Committee (NTSC) hearings of 1940 and 1941. Although the NTSC literally conceived of television as an additive combination of radio sounds plus moving images, with the image channel occupying one portion of the electromagnetic spectrum and sound being sent over an appended FM subcarrier channel, this equation did not rest on a straightforward assumption that either sound or image would achieve maximal fidelity as an individual phenomenon. Rather, the NTSC conceived of television as an interrelated sensory experience, one in which sound and image were combined in such a way as to keep the experience of noise, which midcentury communication theorists argued was an inescapable feature of electronic communication, below certain perceptual thresholds.

In setting maximal allowances for the presence of noise in both image and sound transmission channels, the NTSC provided a technological basis for the way television would come to be understood during the network era as what Marshall McLuhan famously called a "cool" medium, a medium marked by partial fidelity and, despite the NTSC's best intentions, significant amounts of perceptible noise at the level of viewer experience.7 However, in order to avoid the culturally problematic and ahistorical assumptions underpinning McLuhan's theory of television experience, this essay instead draws on the model of perceptual technics advanced by media theorist Jonathan Sterne. Rather than viewing television's limited or partial fidelity as the return of a "primitive" oral form of communication and an ontological feature of television as a medium, this article treats network-era television's relatively low fidelity as the product of historically specific assumptions about the way media technologies relate to human perceptual capabilities. Understanding noise as a key factor in the early development of television not only gives a new perspective on models of experience attributed to network-era American television, but also illuminates the technical factors behind the transition from "old" TV of the broadcast era to the "new" TV of the post-network era, in which high-definition image and multichannel sound are often alleged to have freed television from the technological limitations of its earlier incarnations.

The Persistence of Flow

First advanced in Williams's seminal 1974 monograph on television, the concept of flow has exerted a pervasive influence on theoretical models of television sound in which television is treated as a form of illustrated radio. According to Williams, flow was the defining characteristic of American television, the way a certain sociopolitical imperative, the need to present a continuously attentive audience for commercial advertisers, was made manifest at the level of textual form.8 In his 1986 essay "Television/Sound," Rick Altman builds on Williams's notion of textual flow to posit the complementary concept of "household flow," within which, according to Altman, television consumption typically takes place.9 Citing Nielsen data on television viewing habits, he asserts that although the average American viewer may not sit transfixed before his or her television set, as would the ideal viewer posited by Williams's model, sound nonetheless regulates the kind of constant attention that is central to the way television viewing is structured in American culture. For "important" events, whether a home run in a baseball game, a severe weather alert, or just the next advertising break, sound functions to keep the viewer constantly attentive to the television set, even when the set itself is not visible. Here, Altman borrows from John Ellis's observation that television's importance as a sound medium stems, in large part, from the fact that sound "radiates in all directions, whereas the view of the TV image is sometimes restricted. Direct eye contact is needed with the TV screen. Sound can be heard when screen cannot be seen. So sound is used to ensure a certain level of attention, to drag the viewer back to looking at the set."10 In grounding Ellis's claims about sound-image relations in a specific historical circumstance, namely the fact that most television viewers are not actively "watching" when they claim to be watching, Altman explains how sound sutures the American television experience, linking political economy to representational form and spectatorial engagement.11

Though Altman does not specifically invoke radio as a precedent for the sound aesthetics of television in his discussion of flow, this connection was central to Michele Hilmes's return to the subject in 2008. As she argues, Altman's lone essay on television sound and a scant few pages in Michel Chion's Audio-Vision notwithstanding, sound theory developed over the span of three decades almost exclusively in relation to the norms of cinema and is thus fundamentally ill-suited to explain the function of sound in television.12 In contrast to models of sound theory that frame cinema viewing as the normative mode of audiovisual spectatorship, Hilmes asserts that "television owes its most basic narrative structures, program formats, genres, modes of address, and aesthetic practices not to cinema, but to radio."13 She observes that, because of its supertextual structure (as opposed to the discrete textuality of the individual film), television constitutes a "historical legacy of enormous textual variation," a problem compounded by the "present-time transmission" and "episodic, often open-ended structure" of the average programming schedule.14 As such, Hilmes suggests that, rather than adopting outright the models of auditorship used in film sound scholarship, television scholars should emphasize the particular conditions of the television event, such as the use of buffer music between shows, leading her to describe the overall sound aesthetic of American television as being characterized by "streaming seriality."15 But despite her acknowledgment that flow underpins previous theories of television sound in a way that gives an overly essentialist perspective on the experiences of television listening and viewing, Hilmes's concept of streaming seriality ultimately reinscribes the primacy of flow in television sound theory, while overlooking other factors important to the experience of television sound as it relates to precursors in radio, factors that cannot be explained by an appeal to flow.16

Specifically, the idea of sonic flow regulating viewer interaction with the television set is grounded in implicit assumptions about the relative fidelity of the television set, particularly compared to the experience of theatrical cinema, television's other oft-noted technological predecessor. Indeed, it is precisely this assumption that underpins Ellis's 1982 claim that television sound should be understood as the medium's most significant form of sensory appeal due to the inferior quality of the television image. Describing sound's central role in the experience of television, a role that inverts sound's allegedly subordinate function in theatrical cinema, Ellis notes that "the TV image tends to be simple and straightforward, stripped of detail and excess of meanings [. . .] the image becomes illustration, and only occasionally provides material that is not covered by the sound-track."17 Thus for Ellis, the primacy of sound to the experience of television is not due simply to the phenomenological divide between the acts of looking and listening (the driving assumption of Altman's model) but is related directly to television's image having been "stripped of detail." Like flow, this stripped-down image is also a unique characteristic of the American television system, which reproduced 100 fewer scanning lines (525 versus 625) than the Phase Alternating Line (PAL) standard used in European television.18

If flow requires television sound to guard against the possibility of viewer inattention, this need arises as much from the unstable and low-fidelity nature of television transmission itself as from the household distractions that Altman posits as the reason sound must drive the experience of television. For example, in another Westinghouse television advertisement from 1950, a pair of spokesmodels touts the set's "synchro-tuning" capabilities, a feature meant to keep optimal sound tuning from interfering with optimal image tuning.19 Given that good sound and a good image, as the ad suggests, might have been mutually exclusive for historical television audiences, at least to some extent, it is important to explore how the likelihood of a low-fidelity television experience, or variations in the relative fidelity achieved by sound and image, affected the possibility of continuously attentive spectatorship, something desired by audiences (imagine the picture suddenly cutting out in the final five minutes of a live anthology drama) and advertisers alike.

Although Ellis makes no reference to Understanding Media in discussing television sound, his claim that a stripped-down image is the driving force behind the way television functions as primarily a sound medium can be read as a reframing of McLuhan's earlier argument about television as a "cool medium." According to McLuhan, television's coolness is a product of the very same stripped-down image referenced by Ellis, which McLuhan juxtaposes with the "hotness" of radio sound and the filmic image. Williams's dismissal of McLuhan's position as technologically determinist has marked most subsequent scholarship in television history, scholarship in which technological systems of recording, transmission, and reception are commonly treated as givens rather than as marked by the same degree of cultural construction and historical contingency as television programming.20 Although McLuhan's positions are in need of contextualization and qualification, they still offer a useful starting point for explaining the importance of noise to a historically specific model of television spectatorship. By tracing the experience of televisual flow back to the aesthetic norms in McLuhan's earlier characterization of the medium's technical aesthetics, we can discern a different horizon for the experience of television sound, one structured not around flow and constant attention but rather around the penchant of sound to engender noise, discontinuity, and interruption.

Hot or Cold? Television, Radio, and Relative Fidelity

Unlike virtually all later theorists of television, McLuhan posited not continuity between television and radio aesthetics but rather a degree of fundamental contradiction. Even so, his characterizations of the relative fidelity of the televisual apparatus, in comparison to both cinema and radio, are important to understanding the way later sound theorists would take for granted certain aspects of the experience of watching television, namely the notion that television is an essentially low-fidelity medium. A close examination of his discussion in Understanding Media of television, radio, cinema, and how the three relate to one another makes this point clear. In perhaps the most widely cited passage from the section on television, McLuhan famously asserts that because the visual image of television is of so much lower resolution than film, television produces an effect not unlike abstract art: of the "some three million dots per second" transmitted to a television spectator, "he accepts only a few dozen each instant, from which he makes an image."21 Through its relatively low degree of fidelity to the pro-televisual object or scene, television draws the spectator's attention to the constructed nature of the representation, thus inviting a "convulsive sensuous participation that is tactile and kinetic," and giving TV a "revolutionary" capability that is a function not simply of its omnipresence but of its technological aesthetics, specifically the way they differ so markedly from projected film.22

While the distinction between film and television has been widely discussed by subsequent media theorists, the relationship McLuhan outlines between radio and television has received less attention. He notes that radio, like film, is a "hot" or high-fidelity medium, meaning that the auditory experience of radio is one in which the entirety of a sound event is reproduced. McLuhan suggests that, unlike television, which encouraged a collective mentality through its low-fidelity image, "radio affects most people intimately, person-to-person, offering a world of unspoken communication between writer-speaker and the listener. That is the immediate aspect of radio. A private experience."23 As such, he asserts that "radio will serve as background-sound or as noise-level control as when the ingenious teenager employs it as a means of privacy. TV will not work as background. It engages you. You have to be with it."24 Although the juxtaposition of radio and television as defined essentially by different modes of relative fidelity is a productive observation, it is, like so many of his claims, historically inaccurate. When McLuhan wrote this passage in 1964, his description may very well have reflected the experience of FM radio, which had finally begun to emerge from the shadow of AM by the early 1960s as FM became the preferred medium for the album-oriented rock music popular with youth audiences. However, the regime of static that characterized AM, which reigned as the dominant standard during the period when television experimentation began in earnest in the early 1920s, could not have been expected to produce such an effect in the listener. Indeed, radio could hardly serve as "noise-level control" when it was itself a major source of background noise for the average listener of the AM era. Further, although McLuhan frames the idea of television's partial fidelity as an essentially visual concern, historical debates over television aesthetics ascribed a similar lack of fidelity to the medium's sound reproduction capabilities. By tracing noise as a historical concern from radio to television, we can understand the origins of television's coolness as resolutely audiovisual, rooted in concerns not unlike those that drove the development of the telephone according to a logic Jonathan Sterne has identified as perceptual technics.

TV and Perceptual Technics

Perceptual technics emerged out of the shift from physiological acoustics to psychological acoustics during the early 20th century, as sound researchers sought to use technological instruments to theorize the nature of human audition. No longer viewed simply as a physical and physiological phenomenon, sound came to be understood in informatic terms, as a phenomenon best studied via tools of measurement that avoided the variability and inconsistency of older observational methods. According to Sterne, perceptual technics is defined as "the specific economization of definition through the study of perception in the pursuit of surplus value," a worldview in which the measurement of human perceptual norms serves the capitalistic exploitation of communication systems with flexible reproductive capabilities.25 Central to this process was the telephone, a technology that helped to standardize notions of sound as a medium for the transmission of information by facilitating psychoacoustic measurement. In turn, the telephone was also put to use in service of perceptual technics as researchers at Bell Labs sought to maximize the volume of speech that could be transmitted along the finite physical space of the American telephone infrastructure. Nor is this model applicable only to aural phenomena: the development of an NTSC standard for color television relied on the same kind of reasoning, one in which "compression, rather than verisimilitude" was the driving industrial rationale.26

Yet what neither the example of telephony nor that of color television demonstrates is the extent to which communications systems may convey information via more than one sense, as in the pervasive coupling of sound and image that dominated much of the 20th-century media landscape, and how this coupling can introduce different considerations into a perceptual regime that still privileges compression and profit maximization over strict verisimilitude. As the remaining sections of this essay explore, the first round of NTSC hearings, which preceded those that decided a standard for color television, also operated according to a logic similar to Sterne's notion of perceptual technics, although in these hearings perception was assumed to be multisensory, and the presence of noise was taken as a given part of the communication system. For this reason, it is important to consider precedents for noise in the American broadcast system, specifically in AM radio, against which the NTSC would frame later developments in television.

Radio and Noise

The problem of noise is central to debates in the history of radio, debates often effaced in discussions of the overlap between radio and early television, which was developed while the dominant definition of American radio was still up for grabs. Although histories of television sound typically rely on an implicit assumption that radio's normative identity is that of AM network radio, radio was hardly a medium with a singular aesthetic identity, even after its supposed standardization as a mass medium for one-way broadcast in the 1920s.27 During that decade, AM network radio emerged from a morass of competing models into a stable form, albeit a form that would be dogged by the problem of static for the better part of forty years.28

The overdetermined nature of radio's identity can be seen clearly in the challenge posed by FM to AM beginning in the 1930s, a moment of crisis that made tolerance for noise, and its official sanction by regulatory institutions, a fact of the American broadcast system. According to radio historians Christopher H. Sterling and Michael C. Keith, following the establishment of the major radio networks in the late 1920s,

On the surface, all seemed well, given radio's rapid rise to success over fifteen years. Naturally some were unhappy with one aspect or another of the increasingly commercial service, but one consistent complaint stood out: all too often, static interference or electrical noise would make radio listening nearly impossible. Electrical storms (those that produced lightning) could create crashing blasts of noise that all but wiped out any ability to appreciate radio's talk and music. In the semitropical southern states, static interference with radio signals was a chronic problem for much of the year. All radio receivers suffered from the problem, and there seemed to be no solution. How to overcome static was radio's chief technical dilemma.29

Ironically, this problem was endemic to the very same AM technology that had facilitated the construction of a national radio network in the first place. While allowing radio signals to travel distances great enough to justify infrastructural investment in radio as a national system, amplitude modulation also kept the sound of static tied to the sounds a system was intended to produce. That is, desirable and undesirable frequencies could not be separated from one another, with static rising or falling along with the total volume of the system at a consistent signal-to-noise ratio of 30:1.30 Although the problem drew the attention of no less a figure than NBC head David Sarnoff during this early period, according to Sterling and Keith, "by 1930, most of radio's technical people despaired of ever finding an answer."31

The eventual solution to the problem of static in AM radio, frequency modulation, would come not through a scientific breakthrough, but rather through a reconsideration of the regulatory norms of the American broadcast system. The career of Howard Armstrong, who essentially single-handedly established the viability of FM technology, demonstrates the extent to which regulatory decisions can inscribe aesthetic identities onto a media apparatus, a fact that is central to understanding the relationship between television and radio. Like Lee De Forest, Philo T. Farnsworth, and other prodigies of early television technology, Armstrong made a private fortune from radio patents, eventually becoming the largest shareholder in RCA. Armstrong's innovation in FM, a technology abandoned by other researchers by 1930, was based not on any novel technological arrangement, but rather on the discovery that "trying to use FM in the narrow spectrum channels (10 kilohertz [kHz]) then assigned to radio stations was nearly worthless."32 Instead, Armstrong began to experiment with wideband channels at much shorter wavelengths than standard AM channels, and by 1933 he had settled on a 200 kHz FM channel that was able to produce a signal-to-noise ratio of 100:1, results that dazzled his contemporaries.33 Yet Armstrong's system met with little interest from RCA, as it would have necessitated an entire overhaul of the corporation's broadcast technologies, not to mention forcing a potentially industry-killing repurchase of receivers by consumers, a far cry from Sarnoff's preferred "black box" solution.34

The debate over FM would ultimately be decided twice by regulators, a process that would see static codified as the norm for radio sound until the early 1960s. In a hearing before the Federal Communications Commission (FCC) in 1940, Armstrong would argue his case for a shift to FM, over the objections of not only RCA but also television set manufacturers such as Zenith; both claimed that Armstrong's wideband system would interfere with the channel space planned for television. Despite these objections, Armstrong's suggestion of using the 41–50 MHz band for FM in 200 kHz channels was granted, giving the superior fidelity of FM transmission an apparent victory.35 The victory would be a temporary one. Although the FM industry grew rapidly in the two years leading up to America's entry into World War II, a point at which the commercial electronics industry shifted to the production of military equipment, AM still dwarfed FM, both in terms of number of sets in use and in terms of number of stations broadcasting. By mid-1944, "given increasing indications of rising postwar demand for all broadcast services," the FCC reopened hearings on spectrum allocation, and in early 1945, citing concerns over interference between the 41–50 MHz band occupied by FM and the soon-to-appear television stations situated immediately above this band, the commission shifted FM up to the 82–102 MHz band.36 The decision was ultimately a boon for AM giants NBC and CBS, and all but buried the FM industry until the early 1960s.

While fighting for an AM standard to preserve their business model, both NBC and CBS recognized the loss of fidelity and the increase in noise that this protocol entailed. As such, they sought to pursue FM, not as a sound-only protocol, but as the sound protocol to accompany the introduction of television. Seeking to avoid the original sin of static in the new medium, the networks and the FCC attempted to introduce FM television sound as a safeguard against the interference that had dogged the early history of radio. Despite FM's inherent capacity for clearer sound than AM, the realities of the broadcast environment, ranging from geographical impediments to set design, would see static emerge as the dominant aesthetic of early television, a historically contingent development with significant consequences for practices of television spectatorship and auditorship.

Defining Noise in a Televisual Context

According to Lynn Spigel, noise was a major issue in public discussions taking place in the 1950s and 1960s over the aesthetic and cultural value of television, a tension explored by comedian (and former radio performer) Ernie Kovacs in his pioneering work at NBC, which dealt with the concept of television silence as a response to the medium's penchant for noise. Spigel notes,

During the 1950s newspaper critics and television viewers began to express displeasure for the high-pitched sales pitches of overzealous admen and the endless babble of TV programs. The public distaste for television noise in turn fueled industry and even regulatory efforts to appeal to quieter, more refined tastes [. . .] in this discursive context, noise was a real material problem (i.e. people actually thought TV was too loud) [. . .] in other words, "noise" was a metonym for all of television's failures—proof that the medium could never be art.37

Understood this way, noise works against the "source fidelity" of all-electronic television, which, Spigel observes, was touted as the medium's "greatest aesthetic virtue" by television critics in the 1950s.38 While this context is important for understanding the discourse of television sound during the 1950s and 1960s, when television became a widely established fact of American everyday life, it is equally important to note that a different conception of noise appeared in the technical discourse prior to this period, a discourse in which infrastructural engineering and signal processing mattered as much as the assumptions about cultural integrity and verisimilitude that shaped later debates. Noise, as it relates to the experience of television, is a highly overdetermined concept, and its other valences are in need of historical elaboration.

While television was a definite source of cultural noise during the 1950s, it was also a source of technical noise. Accordingly, another way of understanding noise as it relates to the fidelity of the television system is through the technological models of midcentury communications research, specifically the work associated with Bell Labs. According to the seminal theorizations of Bell Labs mathematician Claude Shannon and his coauthor Warren Weaver, all communication systems can be reduced to channels for the transmission of information, systems in which noise exists as a permanent feature of signal processing. Although Shannon and Weaver's theories have been primarily understood in relation to Bell's work in telephony, the company and its researchers had a longstanding interest in television, an interest reflected in Shannon and Weaver's writings.39 In his 1948 treatise "A Mathematical Theory of Communication," Shannon asserts that the goal of a communication system is not necessarily to eliminate noise entirely, but rather to figure out "ways of transmitting the information which are optimal in combating noise."40 In the case of a continuous signal, like television, that is relayed through a channel with "a certain amount of noise, exact transmission is impossible."41 Expanding on the assumptions underpinning Shannon's argument, Weaver defines noise as "unwanted additions" to a signal, including "static (in radio) [. . . and] distortions of shape or shading in picture (in television)."42 That is, noise is the presence of unintended and undesired information in the transmission, reception, and display of a television broadcast, what the NTSC would also identify as either "static" or "interference."

Viewing noise in these terms, we can see that the critical sensibility McLuhan attributes to television's low-fidelity image was also a product of its low-fidelity sound, a fact rooted in the fundamental limits of radio in the 1940s and 1950s. These limits stemmed as much from surplus information (the presence of static or noise viewed as an addition to the system, as in Shannon and Weaver's formulation) as from a paucity of information (the way in which McLuhan understands television's image). Although the problem of noise can be seen in the discourse around experiments in televisual transmission dating as far back as the early 1920s, the NTSC hearings of 1940 and 1941, which followed the success of researchers at RCA in developing a relatively high-definition image standard of more than 500 lines, would codify this notion of noise as part of the standardized aesthetics of American television, with sound expected to compensate for an inherently noisy and unstable image. As a result, noise became the defining aesthetic feature of television during the broadcast era. In part, noise is important because it qualifies the idea of flow, suggesting that the experience of television viewing cannot be reduced to the kind of textual immersion flow is said to produce. The centrality of noise to the NTSC-era experience of television viewing also throws into relief the eventual transition to HDTV and multichannel sound that began in the 1970s, which can be seen as an attempt to overcome the regime of noise, and thus the attendant possibility of viewer distraction, in favor of a different televisual ontology.

NTSC Standards as Rationalization of Perceptual Experience

In contrast with the haphazard development of national standards for radio during the 1920s and 1930s, the federal government hoped to roll out television in a more orderly fashion, in no small part to avoid a repeat of radio's history, in which networks had standardized a technical protocol capable of only low-fidelity sound transmission. The belated establishment of the Federal Radio Commission in 1927, five years after the creation of Class B licenses, allowed for the operation of high-powered stations capable of much greater geographic coverage, cordoned off amateur operators from the corporate radio business, and facilitated the formation of national radio networks, but it was unable to prevent AM from entrenching itself as the dominant transmission standard, which kept the higher-fidelity FM standard from reaching widespread use once it became a viable option. With the benefit of immediate hindsight on this very recent failure of regulatory intervention into the national communications infrastructure, Congress attempted to stay ahead of the development of television by passing the Communications Act in 1934, right after the first boom in mechanical television, which yielded systems capable of producing no more than 240 scan lines, had given way to the new higher-resolution all-electronic regime pioneered by RCA.43 The Communications Act created a new regulatory entity, the FCC, which was responsible for overseeing both television and radio and was tasked with ensuring that the free-for-all of the early radio period would not recur with the imminent emergence of television. One of the major tasks of the FCC during this period was to negotiate the competing aesthetic standards for television, at the levels of both sound and image.

During the process of standard-setting for early television, television aesthetics as codified by the NTSC were a matter of negotiating the relative levels of image and sound quality available within the respective technologies of radio sound and television image, an ideal that ultimately differed substantially from the actual experience of television. Although television technicians aspired to the level of "naturalness" of photographic and optical sound cinema, the NTSC ultimately codified a set of standards in which a discourse of fidelity gave way to a discourse of adequacy, one in which noise figured as a central part of the television experience.

The rationale offered by the NTSC for adopting standards prior to television's commercialization stressed the new medium's inherent affinities with radio, though in a way that largely overlooked the sonic aspects of television transmission. According to a summary of the history of television regulation published with a transcription of the NTSC's recommendations, "television is an offshoot of sound broadcasting, part and parcel of the radio industry," an industry marked by "all too vivid a memory of the chaotic conditions in the early days of broadcasting," including the rushed adoption of the noise-plagued AM standard.44 The relationship between radio and television posited by the committee's underlying logic was not one of strict homology, though, but rather one predicated on a "demand [for] a greater degree of standardization than is required for sound broadcasting."45 That is, what the committee referred to as the "lock-and-key" relationship required between sender and receiver marks a significant difference between sound broadcast and image broadcast. However, what the committee presented as an innate difference between the two practices actually rests on an assumption about fidelity: the degree to which a representation must resemble an original, reproduce the natural experience of the human sensorium, and/or replicate the experience of existing media, specifically cinema, in order to be considered adequate, at least for the purpose of commercial exploitation. At stake in these arguments for broadcast regulation is a claim about the degree of interference or noise acceptable within the televisual image, a claim that relies on an implicit understanding of the "subjective aspects" of audiovision, one of nine subtopics the committee was tasked with addressing.46

Discussions of the subjective aspects of television viewing in the NTSC report are heavily marked by a discourse of fidelity that invokes theatrical cinema as the standard to which television should aspire. The assumptions underpinning the subjective aspects of the standards prescribed for the television system's visual characteristics often treat the preservation of an original event, television's documentary function, as the most important aspect of the representation, a discourse characterized by the use of terms like "appropriate," "pleasing," and "natural." For example, the discussion of aspect ratio invokes the empirical dimensions of human vision, such as the orientation of isopters in the retina along a relatively elongated horizontal axis, and situates television within a "long artistic experience" as justification for adopting a ratio of width to height "between 5:4 and 4:3," a range nearly identical to that of contemporary theatrical cinema.47 Likewise, the use of perspective and the creation of depth of field are presented as entirely continuous with the practice of photographic cinema, with an effect of "naturalness and adequacy of reproduction of pictures of persons at rest or in normal motion" as the desired outcome.48

But despite this seeming concern for the empirical aspects of human perception, rendered mostly via appeals to the norms of theatrical cinema and its constituent technologies, television had to account for problems germane to live broadcast transmission, such as electrical interference, ghosting, and blurring, as well as the practical realities of an imagined group viewing situation, such as a relatively small, fixed image size and spectators arrayed across a wide range of viewing positions. Unlike cinema, which existed as a stable object projected within a continuous physical space, television relied on the broadcast spectrum and so had to account for a host of visual deficiencies, nearly all of which were discussed in terms of maximally acceptable limits rather than their possible elimination. For example, ideal picture resolution is acknowledged to be a "compromise between technical excellence and cost." Rather than aspiring to a model of 35mm or even 16mm cinema, image quality is framed in terms of the dots per inch (dpi) of a photoengraving. While the NTSC report cites 150 dpi as the norm for a "very good half tone image," 75 dpi is deemed an adequate resolution "considering the distance at which television pictures are viewed at present," because it represents the point at which the grid-like arrangement of discrete dots is no longer visible.49
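The logic of the 75 dpi figure can be made concrete with a little geometry. The Python sketch below assumes (this is not stated in the NTSC report) that a viewer with normal acuity can just resolve detail subtending about one minute of arc, and computes the distance beyond which halftone dots spaced 1/dpi inch apart should blur into continuous tone. The helper name `blend_distance_inches` is hypothetical.

```python
import math

# Assumption (not from the NTSC report): normal visual acuity resolves
# detail down to roughly one minute of arc.
ONE_ARCMINUTE_RAD = math.radians(1 / 60)


def blend_distance_inches(dots_per_inch: float,
                          acuity_rad: float = ONE_ARCMINUTE_RAD) -> float:
    """Hypothetical helper: viewing distance (inches) beyond which adjacent
    halftone dots, spaced 1/dpi inch apart, subtend less than the acuity
    angle and so should no longer be seen as separate dots."""
    dot_pitch = 1.0 / dots_per_inch          # center-to-center spacing, inches
    return dot_pitch / math.tan(acuity_rad)  # from tan(angle) = pitch / distance


for dpi in (150, 75):
    d = blend_distance_inches(dpi)
    print(f"{dpi} dpi: dots merge beyond about {d:.0f} in ({d / 12:.1f} ft)")
```

Under this assumption, 150 dpi detail merges at about 23 inches and 75 dpi detail at about 46 inches: halving the resolution roughly doubles the distance at which the dot structure disappears, which is the trade-off the report accepts when it appeals to typical viewing distance.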

Similarly, undesirable characteristics are not treated as features to be eliminated entirely, but rather as ones to be pushed below the threshold of perception. Of particular importance in the committee's discussion are the concepts of "interference and noise." The report notes that interfering signals "result when a second (and generally weaker) interfering picture is simultaneously received with the desired signal or when impulsive electrical disturbances (either natural or man-made) produce white or black relatively small spots."50 These disturbances are categorized according to whether the interference is due to echo, single-frequency noise, or random noise, and in each case are considered negligible once the ratio of desired signal to interference exceeds a threshold, with thresholds ranging from 50:1 to 25:1.
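Engineers usually express such thresholds in decibels. The report's figures are quoted here only as ratios, so whether they compare amplitudes (voltages) or powers is an assumption; the short Python sketch below converts them both ways. The helper `ratio_to_db` is hypothetical.

```python
import math


def ratio_to_db(ratio: float, amplitude: bool = True) -> float:
    """Hypothetical helper: express a signal-to-noise ratio in decibels.

    Amplitude (voltage) ratios use 20*log10(ratio); power ratios use
    10*log10(ratio). Which convention the NTSC figures follow is an
    assumption, so both readings are shown below.
    """
    factor = 20 if amplitude else 10
    return factor * math.log10(ratio)


for r in (50, 25):
    print(f"{r}:1 -> {ratio_to_db(r):.1f} dB as amplitudes, "
          f"{ratio_to_db(r, amplitude=False):.1f} dB as powers")
```

Read as amplitude ratios, 50:1 and 25:1 come to roughly 34 dB and 28 dB; read as power ratios, roughly 17 dB and 14 dB.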

The discussion of sound in the NTSC report, though much less extensive than discussions of visual standards, evidences the extent to which sound was expected to offer a perceptual complement to an image marked by partiality, limitation, and lack of fidelity. Of the twenty-two standards established by the report, a mere two prescriptions relate to sound. Among myriad guidelines for number of scan lines, image stabilization, refresh rate, brightness, aspect ratio, contrast ratio, gradation, interference, and other visual standards, television sound was reduced to a duet of principles:

13. It shall be standard to use frequency modulation for the television sound transmission.

14. It shall be standard to pre-emphasize the sound transmission in accordance with the impedance-frequency characteristic of a series inductance-resistance network having a time constant of 100 microseconds.51

While the latter standard demonstrates the precise and idiosyncratic calibration of high-frequency emphasis, and of the matching attenuation at the receiver, deemed necessary to minimize perceptible interference at the point of televisual reception, the former standard speaks to the complex work done by sound in television, not simply as an isolated form of phenomenological experience, but as part of an audiovisual communication system. According to the report, in deciding between an AM and an FM standard for television sound, "it was not clear that FM for television sound could be judged purely on its merits as a sound broadcasting medium, since its inclusion in the television channel might have other effects."52 Even though FM was widely understood by regulators of the early 1940s to be the superior standard for sonic transmission, the NTSC's decision to select it over AM reflected the multifaceted role of sound within the larger discourse of broadcast television aesthetics.
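What standard 14 specifies can be unpacked numerically. For a series inductance-resistance network the impedance is R + j2πfL, so its magnitude relative to R grows as sqrt(1 + (2πfτ)^2), where τ = L/R is the 100-microsecond time constant. The Python sketch below computes that relative boost in decibels and the corner frequency 1/(2πτ); treating the network's impedance curve directly as the transmitter's frequency emphasis follows the wording of the standard, and the function name is hypothetical.

```python
import math

TAU = 100e-6  # time constant L/R in seconds, as given in NTSC standard 14


def preemphasis_boost_db(freq_hz: float, tau: float = TAU) -> float:
    """Hypothetical helper: impedance of a series inductance-resistance
    network at freq_hz, relative to its low-frequency value R, in dB.

    |Z| = |R + j*2*pi*f*L| = R * sqrt(1 + (2*pi*f*tau)**2), with tau = L/R.
    """
    x = 2 * math.pi * freq_hz * tau
    return 10 * math.log10(1 + x * x)  # equals 20*log10(sqrt(1 + x**2))


corner_hz = 1 / (2 * math.pi * TAU)  # frequency at which the boost is about 3 dB
print(f"corner frequency: {corner_hz:.0f} Hz")
for f in (100, 1_000, 8_000, 15_000):
    print(f"{f:>6} Hz: +{preemphasis_boost_db(f):.1f} dB")
```

On these assumptions the boost is negligible at low frequencies, reaches about 3 dB near 1.6 kHz, and approaches 20 dB at 15 kHz. A receiver applies the complementary de-emphasis, lowering the treble, and with it high-frequency noise picked up along the way, back to a flat overall response.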

Rather than refer to the quality of the sound offered by FM transmission itself, the NTSC report stressed the way FM would contribute to the overall television system, as part of a perceptual regime in which radio sound combined with the television image to produce a desired level of quality despite deficiencies that discussions of subjective aspects had argued could be rendered "imperceptible" or "unnoticeable" as isolated variables. In part, FM was desirable because it promised system-wide cost savings. Even though FM receivers were more expensive to produce, the lower power demands of FM transmission meant that less energy was required at the transmitter, which would also yield savings in equipment capacity. But most of all, FM was prized for its ability to improve signal-to-noise ratio. According to the report,

The f-m [sic] sound transmitter with one-half the carrier rating of the a-m [sic] transmitter will give an improvement in signal-to-noise ratio of at least 17 db [. . .] the signal-to-noise ratio improvement of at least 17 db is extremely important, since this is an over-all system gain of such great importance as to far outweigh any incidental apparatus advantages or disadvantages of the transmitter or receiver. This is especially true in light of past experience, which indicates that considerable degradation of the picture will be tolerated if the accompanying sound is absolutely free from the usual objectionable noises.53

So, despite the fact that the overwhelming majority of the NTSC report is dedicated to discussing problems with the televisual image and their solutions through filters, buffers, offsets, and other technological containment strategies, the committee ultimately expected FM sound to compensate for what it knew would be a not insignificant amount of noise and interference experienced at the reception end by a consumer base that had been slow to adopt radio precisely due to its unreliable nature.54
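To give a sense of scale for the report's 17 dB figure, a decibel value can be converted back into a plain ratio. The Python sketch below assumes the figure refers to a ratio of powers, the usual convention for decibels, and also shows the equivalent amplitude ratio; the helper names are hypothetical.

```python
def db_to_power_ratio(db: float) -> float:
    """Hypothetical helper: power ratio for a gain in decibels, 10**(dB/10)."""
    return 10 ** (db / 10)


def db_to_amplitude_ratio(db: float) -> float:
    """Hypothetical helper: amplitude (voltage) ratio, 10**(dB/20)."""
    return 10 ** (db / 20)


gain_db = 17  # minimum FM signal-to-noise improvement cited in the NTSC report
print(f"{gain_db} dB is about {db_to_power_ratio(gain_db):.0f} times in power, "
      f"about {db_to_amplitude_ratio(gain_db):.1f} times in amplitude")
```

By this reckoning, the improvement the committee cites amounts to roughly a fiftyfold gain in signal power relative to noise, or about a sevenfold gain in amplitude.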

Conclusion

The codification of maximally acceptable limits for noise in the televisual image outlined in the NTSC report and sound's imagined role in mediating the experience of televisual noise recalls earlier debates in telephone research, which Sterne argues offer key insights into understanding the role that communications infrastructures have played in shaping the cultural functions of media. For AT&T, maximization of revenue from its telephone monopoly depended on its ability to measure and commodify the human sensorium, a practice enacted by designing microphones and speakers to be used in telephonic transmission that would transmit only the frequencies necessary for the production of intelligible speech. That is, in order to fit as many calls as possible along a wire with a finite amount of capacity, AT&T sought to minimize the transmission of nonessential frequencies, frequencies identified by the company's research in the field of psychoacoustics, to generate surplus value from the gap between speech production and cognition. In assessing the ramifications of perceptual technics for a theory of political economy of media, Sterne notes,

Like psychotechnics, ergonomics, and human factors, perceptual technics owes a political and philosophical debt to Taylorism. It also owes a debt to corporate liberalism, although the ubiquity of perceptual coding today suggests it is equally at home in neoliberal and global economies. Perceptual technics involves corporations, engineers, and others managing technologies in relation to statistical aggregates of people. AT&T's innovation was thus to focus its application of psychology to industry at the threshold of perception, to give that threshold an economic value, and to build a series of research problems, research methods, and industrial techniques around the pursuit of that value [. . . . L]ike ergonomics, perceptual technics is an ambivalent innovation. One may be tempted to feel nostalgic for a prior moment in history when the human body itself was unalienated and was not subjected to politics or economy, but that would amount to nostalgia for the Middle Ages. As hearing research moved toward living subjects, even to objectify them, there was a new level of social engagement and exchange.55

Though the concept of perceptual technics was introduced in relation to a specific regime of sound research, a paradigm that functions according to an elimination of information flows, I argue that the NTSC's strategy of perceptual adequacy via the codification of noise as a normal aspect of the television experience amounts to a similar "ambivalent innovation," an innovation that underpins the hot-cold binary in which McLuhan implicated postwar American television vis-à-vis radio. For decades, cultural critics treated broadcast television as an aesthetically inferior medium, with its technically limited capacity for verisimilar representation in comparison to theatrical cinema standing metonymically for its larger lack of redeeming social value, a notion typified by FCC chairman Newton Minow's famous characterization of television as a "vast wasteland" in 1961. However, television's status as a low-fidelity medium is not simply a product of its impoverished data flow in comparison to either radio or cinema, but is also a product of the standardization of surplus data, noise, as a routine part of the television experience. As much as the domestic setting emphasized by sound theorists, noise is a feature that threatens to constantly unsettle the attentive viewing that underpins the political economy of American television.

Although the NTSC was expected to decide on a set of standards capable of producing a televisual experience of clarity, reliability, and fidelity in comparison to the often indistinct, distorted, and contingent experience of AM radio sound, FM sound would ultimately fail to serve as the panacea for the built-in deficiencies of television's visual image. As Max Dawson has demonstrated, for many Americans during the 1950s, especially those living outside of major metropolitan areas, static was the most common form of signal to come across the televisual receiver.56 In an inverse of the interference problem posited by the NTSC report, in which committee members expressed concern over sound contaminating the image through interference, VanCour notes that the picture signal often interfered with the accompanying sound signal, leading to an FCC-mandated drop in power on the sound subcarrier during the late 1950s. Of the practical realities of television sound broadcast and reception, he remarks,

Technological possibilities for early television sound were further complicated by a wide variability in the range of frequencies reproducible in this medium. On the transmission end, landlines connecting early network stations were at best capable of passing frequencies only up to 8,000 Hz, often considerably lower. While programming originated by local stations could offer high-fidelity audio, those received via network feed thus offered little to no improvement over standard AM radio broadcasts and fell well short of the full 15,000 Hz ceiling for which period transmitters were certified.57

Despite the best efforts of the NTSC, noise persisted as a problem resistant to filters, buffers, cutoffs, and other strategies employed to keep unwanted signals from reaching television users, a problem that would continue to preoccupy television researchers into the late 1940s and early 1950s.58 Indeed, as Jeffrey Sconce notes, the expressive vagaries of the televisual apparatus would become narrativized by shows like The Outer Limits (ABC, 1963–1965), which played on television sets' widespread penchant for displaying static, interference, after-images, and other seemingly phantasmal residues.59

In looking back at the roots of this phenomenon in the NTSC hearings, as well as the afterlife of noise in television, we can see a different version of broadcast television sound's relationship to both visual and sonic noise, a version that qualifies our understanding of the necessity of "flow." In contrast to the notion that broadcast television has an innate affinity for seriality and continuity as a realist medium, noise emerges as an alternate televisual ontology, an ontology that reflects broadcast television's development via technologies of radio. If flow's significance can be said to explain how television functions in service of corporate liberal ideology, noise explains the potential for television to work against these very same constraints by providing the possibility for the television viewer to be distracted, inattentive to, or distanced from the television set as a fact of the television signal itself. As Sterne observes, practices of media compression "often begin close to economic or technical considerations, but over time they take on a cultural life separate from their original, intended use."60 Noise stands as one such feature that defines the cultural life of television in ways largely unrelated to the original technical and economic considerations to which it was bound.61

The notion that noise promotes, in Sterne's terms, "social engagement" with the televisual apparatus is in line with how sound theorists have understood noise to function within the political economy of attention and experience, a position best captured in the work of Jacques Attali. For Attali, noise and its inverse—music—form a fundamental dialectic of social reality, "the audible waveband of the vibrations and signs that make up society."62 Given that this dialectic functions as one of the "structuring differences" at the core of organized society, Attali argues that "any theory of power today must include a theory of the localization of noise and its endowment with form."63 The NTSC reports represent precisely this kind of attempt to endow noise with form, to turn electromagnetic frequencies into meaningful sounds and images that impose order on the material world. However, in also preserving residual noise, the aesthetic regime of television that emerged as a result of the medium's encounter with radio up through the NTSC hearings produces an awareness of the constructed nature of the televisual experience. The centrality of noise to the video art of Nam June Paik, perhaps most clearly on display in his Beatles Electroniques (1969), shows the possibility for televisual noise to function expressively rather than simply as a barrier to a more perfect verisimilitude.

Beyond offering an important historical context for the emergence of television in the 1940s and 1950s, noise also provides a way to think about the divide between network-era American television and contemporary television, which, as the result of more than thirty years of research into higher-fidelity technologies, has undergone an aesthetic overhaul, one that has rendered the perceptual experience of television today dramatically different from what it was during the network era. If early television was defined through what we might call an aesthetic of adequacy, an aesthetic in which standards for televisual fidelity represented a compromise between ideals of realism and verisimilitude and practical considerations for the variable stability of electronic transmission and representation, the development of new protocols for television image and sound, especially those identified and promoted by the Advanced Television Systems Committee, represents a key point of rupture with this old regime. HDTV has been framed by cultural critics as an improvement on older aesthetic models of television, as Michael Newman and Elana Levine argue in noting HDTV's central role in the cultural legitimation of contemporary television. By examining the way advertisements for HDTV have circulated since the technology's introduction as a consumer phenomenon in the mid-2000s, Newman and Levine assert that one of the most significant aspects of HDTV's increased picture quality is the way in which it serves to frame television as masculine and elite in contrast to the medium's lower-class and feminized origins.

However, it may be more accurate to view HDTV not simply in terms of an increase in signal quality or volume, but rather as a protocol for the elimination of noise.64 As I have argued elsewhere, HDTV represents an attempt to rethink the ideal experience of television in a way that avoids many of the perceptual limitations of old TV by modeling the television image after 35mm film, thus interpellating the viewer into an experiential regime characterized by a higher degree of attention and the elimination of technical defects likely to cause viewer distraction.65

Much in the way that Dawson has asserted that the digital video recorder (DVR) reflects the neoliberal imperative that contemporary television viewing be efficient, liberating the viewer from the inflexibility of the television schedule, HDTV similarly presents itself as an improvement over network-era aesthetic standards, making the television set a suitable interface not only for television programming, but also for streaming video services, Blu-ray discs, and advanced gaming consoles.66 Despite attempts to render new television distinct from old television through what Dawson has elsewhere referred to as an "aesthetic of efficiency" via new shorter video forms intended to be consumed on small and mobile screens, new forms of television viewing, all of which are based on protocols for digital compression, have preserved noise as a feature of contemporary video forms and routinely draw attention to the technical nature of television viewing, not least through "buffering," an experience familiar to anyone who has attempted to watch television on a handheld mobile device.67 Even if wider aspect ratios, greater image density, multiple sound channels, and more artistic programming have pushed contemporary television toward the same kind of legitimate cultural status as cinema, television-as-radio continues to serve as the dominant metaphor within television history, with the persistence of noise in the digital age standing as a constant reminder of this. In order to keep this analogy from being reduced to a hollow truism, though, it is imperative that the nature of television's relationship to radio be adequately historicized, and that television historians also remain sensitive to the multiplicity of meanings made possible by this and other intermedial constellations of the television apparatus.

Thanks to Lynn Spigel, Jacob Smith, Mimi White, Doron Galili, and the journal's anonymous reviewers for useful feedback and comments on earlier versions of this essay.

About the Author

Luke Stadel is a media historian who specializes in the history of media technologies, especially the history of American television. Approaching media from the perspective of apparatus, infrastructure, and technological assemblage, his research attempts to unpack the deep histories of everyday media technologies and to discover the meanings embedded in technologies before they reach consumer audiences, as well as the multiple and varied uses to which media technologies are put, especially in professional and specialized settings. His work on the cultural history of media technologies has been published in Cinema Journal; Quarterly Review of Film and Video; Early Popular Visual Culture; Music, Sound, and the Moving Image; Spectator; and Popular Communication.

Endnotes

1 Perhaps the first such instance is Rudolf Arnheim's Radio (New York: Arno Press, 1971 [1936]), which remains a touchstone for contemporary television history and theory. For one particularly notable recent example, see Rick Altman's invocation of television as "radio with images" in Silent Film Sound (New York: Columbia UP, 2004), 16. According to Altman, this particular semantic constellation is justified because "every television contains a radio."

2 A partial list of recent monographs that treat radio and television as a single regime in the context of broadcasting would include William Boddy, Fifties Television: The Industry and Its Critics (Urbana: U of Illinois P, 1991); Douglas Gomery, A History of Broadcasting in the United States (New York: Wiley-Blackwell, 2008); Robert McChesney, Telecommunications, Mass Media, and Democracy (Oxford: Oxford UP, 1994); Paddy Scannell, Radio, Television and Modern Life (New York: Wiley-Blackwell, 1996); Hugh M. Slotten, Radio and Television Regulation: Broadcast Technology in the United States, 1920–1960 (Baltimore: Johns Hopkins UP, 2000); Susan Smulyan, Selling Radio: The Commercialization of American Broadcasting, 1920–1934 (Washington, DC: Smithsonian Institution Press, 1994); Thomas Streeter, Selling the Air: A Critique of the Policy of Commercial Broadcasting in the United States (Chicago: U of Chicago P, 1996).

3 Also worth noting is the significant influence of other sound media including the telephone, phonography, and cinema on the historical development of television, a fact overlooked by histories that privilege the idea of dominant practice in television history. All of these are explored as case studies in Luke Stadel, "Television as a Sound Medium, 1922–1994" (PhD diss., Northwestern University, 2015).

4 Westinghouse television commercial, 1950, https://www.youtube.com/watch?v=sdKejhO9NWU. Thanks to Lynn Spigel for sharing this ad with me.

5 Shawn VanCour, "Television Music and the History of Television Sound," in Music in Television: Channels of Listening, ed. James Deaville (New York: Routledge, 2011), 57–79; Philip J. Sewell, Television in the Age of Radio: Modernity, Imagination, and the Making of a Medium (New Brunswick: Rutgers UP, 2014); NBC: America's Network, ed. Michele Hilmes (Berkeley: U of California P, 2007).

6 For another recent discussion of the idea of television as "illustrated radio," albeit one that ultimately affirms this concept in relation to contemporary developments in technologies of the television image, see Anthony Enns, "The Acoustic Space of Television," Journal of Sonic Studies 3.1 (Oct. 2012), http://journal.sonicstudies.org/vol03/nr01/a08.

7 Marshall McLuhan, Understanding Media: The Extensions of Man (Cambridge: MIT Press, 1994 [1964]), 308–37.

8 Raymond Williams, Television: Technology and Cultural Form (New York: Routledge, 2003 [1974]), 77–120.

9 Rick Altman, "Television/Sound," in Studies in Entertainment: Critical Approaches to Mass Culture, ed. Tania Modleski (Bloomington: Indiana UP, 1986), 39–54.

10 John Ellis, Visible Fictions: Cinema, Television, Video (London: Routledge, 1982), 128.

11 It is worth noting John Caldwell's strident disagreement with Altman, in which he asserts that all of the six functions ascribed to television sound by Altman "are also operative within television's imagery as well." Televisuality: Style, Crisis, and Authority in American Television (New Brunswick: Rutgers UP, 1995), 158.

12 Michel Chion, Audio-Vision: Sound on Screen, trans. Claudia Gorbman (New York: Columbia UP, 1994 [1990]), 157–68.

13 Michele Hilmes, "Television Sound: Why the Silence?" Music, Sound, and the Moving Image 2.2 (Autumn 2008): 160. doi:10.3828/msmi.2.2.10

14 Ibid., 153, 159.

15 Ibid., 158.

16 For another pointed critique of flow, see Mimi White, "Flows and Other Close Encounters with Television," in Planet TV: A Global Television Reader, ed. Lisa Parks and Shanti Kumar (New York: NYU Press, 2003), 94–110.

17 Ellis, Visible Fictions, 129.

18 Both mechanical and electronic television systems were based on the principle of scanning first outlined by Paul Nipkow in his 1884 patent for an "electric telescope." Although the NTSC standard was used widely on an international basis during the 1940s and 1950s, regionally and nationally specific alternatives, such as PAL, helped to solidify NTSC as a uniquely American protocol, as it was used continuously in the United States for over sixty years. PAL was developed in West Germany in the early 1960s and adopted across much of Western Europe as an alternative to the NTSC color protocol, both in terms of scan line count and in terms of stability of color reproduction. French, Soviet, and Eastern European television adopted the SECAM standard in 1967, which also used a 625-line protocol. See John P. Freeman, "The Evolution of High-Definition Television," Journal of the Society of Motion Picture and Television Engineers 93.5 (May 1984): 492–501.

19 Westinghouse television commercial, 1950, https://archive.org/details/westinghouse1950televisonset. For a discussion of the practice of sound-first tuning in the early broadcast era, see Stadel, "Television as a Sound Medium," 161–62.

20 For Williams on McLuhan's alleged technological determinism, see Television, 129–32.

21 McLuhan, Understanding Media, 313.

22 Ibid., 314.

23 Ibid., 299.

24 Ibid., 311–12.

25 Jonathan Sterne, MP3: The Meaning of a Format (Durham: Duke UP, 2012), 53.

26 Jonathan Sterne and Dylan Mulvin, "A Low Acuity for Blue: Perceptual Technics and American Color Television," Journal of Visual Culture 13 (2014): 118–38. doi:10.1177/1470412914529110

27 FM has typically been discussed by media scholars as part of the stereo culture of the 1960s rather than as continuous with traditional broadcasting. See, for example, Tim J. Anderson, Making Easy Listening: Material Culture and Postwar American Recording (Minneapolis: U of Minnesota P, 2005).

28 According to Susan Douglas, all the features of the American broadcast system were in place by 1922. Inventing American Broadcasting, 1899–1922 (Baltimore: Johns Hopkins UP, 1987).

29 Christopher Sterling and Michael C. Keith, Sounds of Change: A History of FM Broadcasting in America (Chapel Hill: U of North Carolina P, 2008), 16.

30 Ibid., 22.

31 Ibid., 16.

32 Ibid., 19.

33 Ibid., 21–22.

34 According to Sterling and Keith, "the public already had some $3 billion invested in 40 million AM receivers, broadcasters had expended about $75 million in the more than 800 AM stations on the air, and radio advertisers were spending some $170 million annually to advertise over those stations." Sounds of Change, 34.

35 Ibid., 31–35.

36 Ibid., 55–66.

37 Lynn Spigel, TV by Design: Modern Art and the Rise of Network Television (Chicago: U of Chicago P, 2008), 179–80.

38 Ibid., 181.

39 On the influence of the telephone on television during the experimental period, see Stadel, "Television as a Sound Medium," 50–97.

40 Claude Shannon, "A Mathematical Theory of Communication," Bell System Technical Journal 27.3–4 (July/Oct. 1948): 407.

41 Shannon, "A Mathematical Theory of Communication," 646.

42 Warren Weaver, "Recent Contributions to the Mathematical Theory of Communication," in The Mathematical Theory of Communication (Urbana: U of Illinois P, 1949), 99.

43 For a discussion of the period of mechanical scanning, see Albert Abramson, The History of Television, 1880 to 1941 (Jefferson, NC: McFarland, 1987); R. W. Burns, Television: An International History of the Formative Years (London: Institution of Electrical Engineers, 1998); and Joseph H. Udelson, The Great Television Race: A History of the American Television Industry 1925–1941 (Tuscaloosa: U of Alabama P, 1982).

44 Donald G. Fink, ed., Television Standards and Practice: Selected Papers from the Proceedings of the National Television System Committee and Its Panels (New York: McGraw-Hill, 1943), 1.

45 Ibid., 2.

46 Ibid., 15–16.

47 Ibid., 48–51. Although 1.33:1 was the standard aspect ratio for silent cinema, the addition of optical soundtracks narrowed the image to a ratio closer to 1.20:1. Hollywood eventually settled on a ratio of 1.37:1, which remained the industry standard until the rise of television prompted the adoption of wider aspect ratios during the mid-1950s.

48 Ibid., 86–89.

49 Ibid., 62–63.

50 Ibid., 74–76.

51 Ibid., 20.

52 Ibid., 155.

53 Ibid., 160–61 (emphasis added).

54 Sterling and Keith, Sounds of Change.

55 Sterne, MP3, 53–55.

56 Dawson, "Reception Problems: Postwar Television and the Amateur Experimenter," presentation at Society for Cinema and Media Studies Annual Conference (Park Plaza Hotel, Boston, Mar. 22, 2012), http://maxdawson.tv/2012/05/28/receptionproblems/.

57 VanCour, "Television Sound and the History of Television Music," 60–62.

58 Pierre Mertz, "Perception of Television Random Noise," Journal of the Society of Motion Picture and Television Engineers 54 (Jan. 1950): 8–34, doi:10.5594/j06377; A. D. Fowler, "Observer Reaction to Video Crosstalk," Journal of the Society of Motion Picture and Television Engineers 57 (Nov. 1951): 416–24, doi:10.5594/j17078. Significantly, research on levels of tolerable noise in television transmission was conducted not by researchers at RCA or other labs affiliated with television producers, but rather by Bell Labs, which managed the wired infrastructure that underpinned the broadcast system. On the important role of Bell Labs in developing the infrastructure of American television, see Jonathan Sterne, "Television Under Construction: American Television and the Problem of Distribution, 1926–62," Media, Culture & Society 21 (1999): 503–30. doi:10.1177/016344399021004004

59 Jeffrey Sconce, Haunted Media: Electronic Presence from Telegraphy to Television (Durham: Duke UP, 2000), 124–66.

60 Sterne, MP3, 5.

61 For another example of the polymorphous valences of noise in relation to the cultural and technical organization of a new sound medium, see Mara Mills, "Deafening: Noise and the Engineering of Communication in the Telephone System," Grey Room 43 (Spring 2011): 118–43.

62 Jacques Attali, Noise: The Political Economy of Music, trans. Brian Massumi (Minneapolis: U of Minnesota P, 1985 [1977]).

63 Ibid., 5–6.

64 Michael Z. Newman and Elana Levine, Legitimating Television: Media Convergence and Cultural Status (New York: Routledge, 2011).

65 Luke Stadel, "From 35mm to 1080p: Film, HDTV, and Image Quality in American Television," Spectator 32.1 (Spring 2012): 31–36.

66 Max Dawson, "Rationalizing Television in the USA: Neoliberalism, the Attention Economy, and the Digital Video Recorder," Screen 55.2 (Summer 2014): 221–37. doi:10.1093/screen/hju011

67 Max Dawson, "Television's Aesthetic of Efficiency: Convergence Television and the Digital Short," in Television as Digital Media, ed. James Bennett and Niki Strange (Durham: Duke UP, 2011), 204–29.